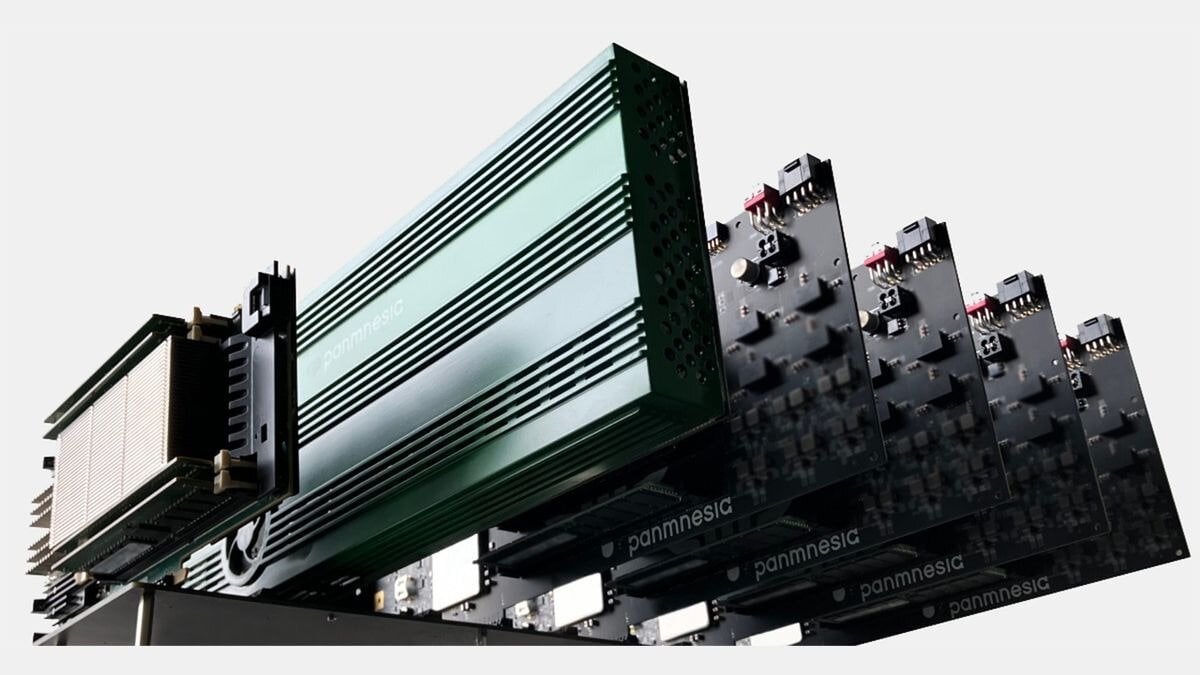

Modern GPUs for AI and HPC applications rely on a finite amount of onboard HBM (high-bandwidth memory), which limits their performance in AI and other workloads. A new technology will allow companies to expand GPU memory capacity by attaching additional memory through PCIe-connected devices rather than relying only on the GPU's onboard memory. SSDs can also be used to expand the memory. Panmnesia, a company backed by South Korea’s renowned KAIST research institute, has developed low-latency CXL IP that can be used to expand GPU memory via CXL memory expansion cards.

The memory requirements of the more sophisticated datasets used to train AI models are increasing rapidly, so companies in this space are forced to buy more GPUs, use less sophisticated datasets, or fall back on CPU memory, which limits system performance. CXL is a protocol that typically runs over a PCIe link, allowing users to connect more memory to the system via a PCIe port. However, the technology must be recognized by the ASIC and its subsystem, so adding a CXL controller alone is not enough to make it work, especially in the case of a GPU.

Panmnesia faced several challenges in integrating the CXL protocol for GPU memory expansion, because GPUs have no CXL logic fabric or corresponding subsystems that support DRAM and/or SSD endpoints. Additionally, GPU cache and memory subsystems do not recognize any expansion other than Unified Virtual Memory (UVM), which tends to be slow.

To address this issue, Panmnesia has developed a CXL 3.1-compatible Root Complex (RC) equipped with multiple Root Ports (RPs) that support external memory over PCIe, as well as a host bridge with a host-managed device memory (HDM) decoder that connects to the GPU’s system bus. The HDM decoder, which is responsible for managing the system memory address range, essentially tricks the GPU’s memory subsystem into “thinking” it is handling system memory, when in fact it is accessing PCIe-connected DRAM or NAND. This means that DDR5 memory or SSDs can be used to expand the total memory available to the GPU.
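The address-range idea behind the HDM decoder can be illustrated with a toy lookup table. This is only a sketch under stated assumptions, not Panmnesia's design: the window sizes, the endpoint labels (`local-hbm`, `rp0-dram`, `rp1-ssd`) and the `route` helper are all hypothetical, and real HDM decoders also handle details such as interleaving that are left out here.

```python
from dataclasses import dataclass
from typing import Tuple

GIB = 1 << 30


@dataclass(frozen=True)
class AddressWindow:
    base: int    # first address covered by the window
    size: int    # window length in bytes
    target: str  # hypothetical label for the memory behind the window


# Hypothetical address map: local HBM first, then two CXL-attached windows.
WINDOWS = [
    AddressWindow(base=0, size=16 * GIB, target="local-hbm"),
    AddressWindow(base=16 * GIB, size=64 * GIB, target="rp0-dram"),
    AddressWindow(base=80 * GIB, size=256 * GIB, target="rp1-ssd"),
]


def route(addr: int) -> Tuple[str, int]:
    """Return (target, offset within target) for a physical address."""
    for window in WINDOWS:
        if window.base <= addr < window.base + window.size:
            return window.target, addr - window.base
    raise ValueError(f"address {addr:#x} is not mapped")


if __name__ == "__main__":
    for addr in (0x1000, 16 * GIB + 0x1000, 80 * GIB):
        print(hex(addr), "->", route(addr))
```

From the requester's point of view every address is just memory; only the lookup decides whether the access stays local or goes out over a root port.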

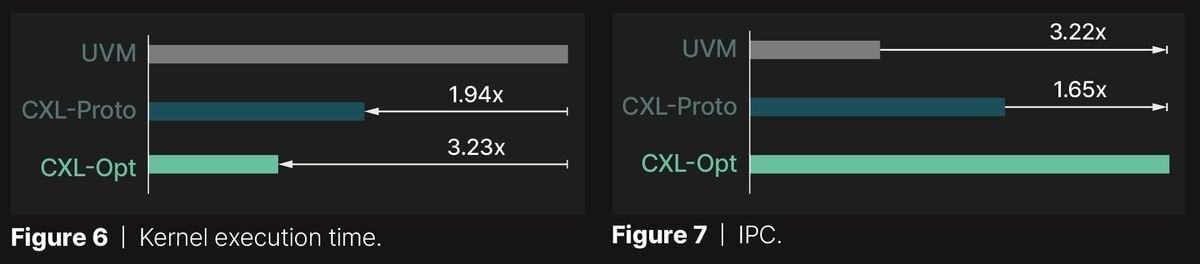

The solution (based on a custom GPU and labeled CXL-Opt in Panmnesia's charts) has been thoroughly tested and recorded a double-digit nanosecond round-trip latency, compared to 250 nanoseconds for the prototypes developed by Samsung and Meta (labeled CXL-Proto). According to Panmnesia, that figure includes the time needed to convert between standard memory operations and CXL transactions. The IP has been successfully integrated into both memory expanders and GPU/CPU prototypes, demonstrating its compatibility with various forms of hardware.

In Panmnesia's tests, UVM performed worst across all GPU kernels tested, due to the overhead of handling page faults and of transferring data at page granularity, which often moves more data than the GPU needs. CXL, by contrast, allows direct access to the expanded storage via load/store instructions, eliminating these issues.
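A back-of-the-envelope model shows why page-granularity transfers can exceed what the GPU needs. The numbers here are assumptions chosen for illustration, not figures from Panmnesia: a 4 KiB page, a 64-byte fine-grained access, and a worst-case sparse pattern in which every access lands in a different page or line. The `bytes_moved` helper is hypothetical.

```python
def bytes_moved(num_accesses: int, useful_bytes: int, granule_bytes: int) -> int:
    """Bytes transferred if every access pulls in whole granules.

    Assumes each access touches a distinct granule (worst-case sparse access).
    """
    granules_per_access = -(-useful_bytes // granule_bytes)  # ceiling division
    return num_accesses * granules_per_access * granule_bytes


PAGE_BYTES = 4096  # assumed page size for page-level migration
LINE_BYTES = 64    # assumed size of one fine-grained access

if __name__ == "__main__":
    accesses, useful = 1000, 8  # 1000 scattered 8-byte reads
    paged = bytes_moved(accesses, useful, PAGE_BYTES)
    direct = bytes_moved(accesses, useful, LINE_BYTES)
    print(f"page-level: {paged} bytes, fine-grained: {direct} bytes")
    print(f"amplification: {paged // direct}x")
```

Under these assumptions the page-level path moves 64 times more data for the same useful bytes. Real workloads with better locality would see a smaller gap.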

As a result, CXL-Proto's execution time is 1.94x shorter than UVM's. Panmnesia’s CXL-Opt reduces execution time by a further factor of 1.66, thanks to an optimized controller that achieves double-digit nanosecond latency and lower read/write latency. The same pattern appears in another dataset showing IPC values recorded while running GPU kernels, where Panmnesia’s CXL-Opt is 3.22x and 1.65x faster than UVM and CXL-Proto, respectively.
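The two execution-time factors can be chained as a quick sanity check. This sketch uses only the figures quoted above; the variable names are illustrative. Execution time and IPC are different metrics, so the comparison with the 3.22x IPC figure is a rough consistency check, not a derivation.

```python
UVM_TO_PROTO = 1.94  # CXL-Proto execution time vs. UVM (quoted above)
PROTO_TO_OPT = 1.66  # CXL-Opt execution time vs. CXL-Proto (quoted above)

# Chaining the two factors gives the implied CXL-Opt vs. UVM factor.
uvm_to_opt = UVM_TO_PROTO * PROTO_TO_OPT

# Execution times normalized so that UVM = 1.0.
proto_time = 1.0 / UVM_TO_PROTO
opt_time = proto_time / PROTO_TO_OPT

if __name__ == "__main__":
    print(f"implied CXL-Opt vs. UVM factor: {uvm_to_opt:.2f}x")
    print(f"normalized times: UVM=1.00, CXL-Proto={proto_time:.2f}, CXL-Opt={opt_time:.2f}")
```

The product comes to about 3.22, which lines up with the 3.22x reported for IPC.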

Overall, CXL support could greatly help GPUs in AI and high-performance computing tasks, but performance remains a major open question. It also remains to be seen whether companies like AMD and Nvidia will include CXL support in their GPUs. And if the technology gains momentum, it is not certain whether the market giants will use IP blocks from companies like Panmnesia or simply develop their own.
