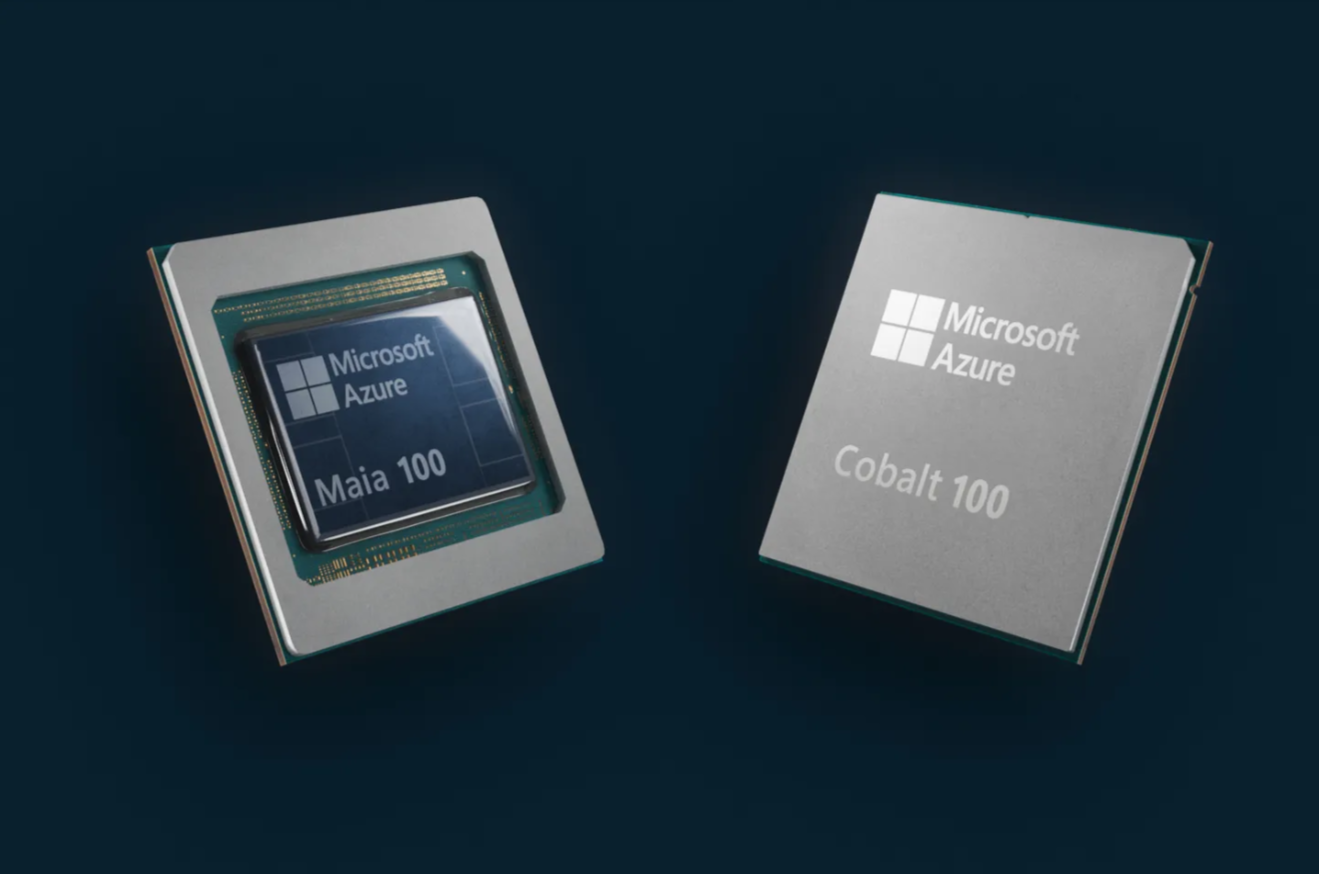

Microsoft unveiled two chips of its own design at its Ignite conference in Seattle. The first, the Maia 100, is intended for AI workloads and could compete with offerings from Nvidia, which currently dominates this category of chips.

The second is the Cobalt 100, an Arm-based chip designed for general-purpose computing, which could in theory compete with Intel processors. Intel and AMD together dominate the processor market for home and business computers.

As CNBC notes, cash-rich technology companies are starting to give their customers more choices of cloud infrastructure on which to run applications. Companies such as Alibaba, Amazon, and Google have been doing this for years.

Microsoft, which had about $144 billion in cash at the end of October, had a cloud market share of 21.5% in 2022, behind only Amazon, according to Gartner estimates.

Virtual machines running on Cobalt chips will become commercially available through Microsoft's Azure cloud in 2024, Microsoft senior vice president Rani Borkar told CNBC. She did not provide a timeline for the Maia 100.

The competition

Google announced the first version of its AI processor, the Tensor Processing Unit (TPU), in 2016. Amazon Web Services, for its part, unveiled the Arm-based Graviton chip and the Inferentia AI processor in 2018, and announced Trainium, for training models, in 2020.

Custom AI chips from cloud providers could help meet demand during GPU shortages. Unlike Nvidia or AMD, however, Microsoft and other cloud computing companies do not plan to let customers buy servers containing their chips.

Borkar explained that the company built its AI computing chip based on customer feedback.

Outlook

Microsoft is testing how well the Maia 100 meets the needs of Bing's AI chatbot, GitHub's Copilot coding assistant, and GPT-3.5-Turbo, a large language model from Microsoft-backed OpenAI, Borkar said. OpenAI has trained its language models on large amounts of information from the internet, and they can compose emails, summarize documents, and answer questions.

The GPT-3.5-Turbo model powers OpenAI's ChatGPT assistant, which became popular after its release last year. Companies then moved quickly to add similar chat capabilities to their software, driving up demand for GPUs.

"We are working across the board and with all of our different suppliers to help improve our supply situation and support our many customers and the demand they have put in front of us," Nvidia CFO Colette Kress said at an Evercore conference in New York in September.

OpenAI previously trained models on Nvidia GPUs in Azure.
